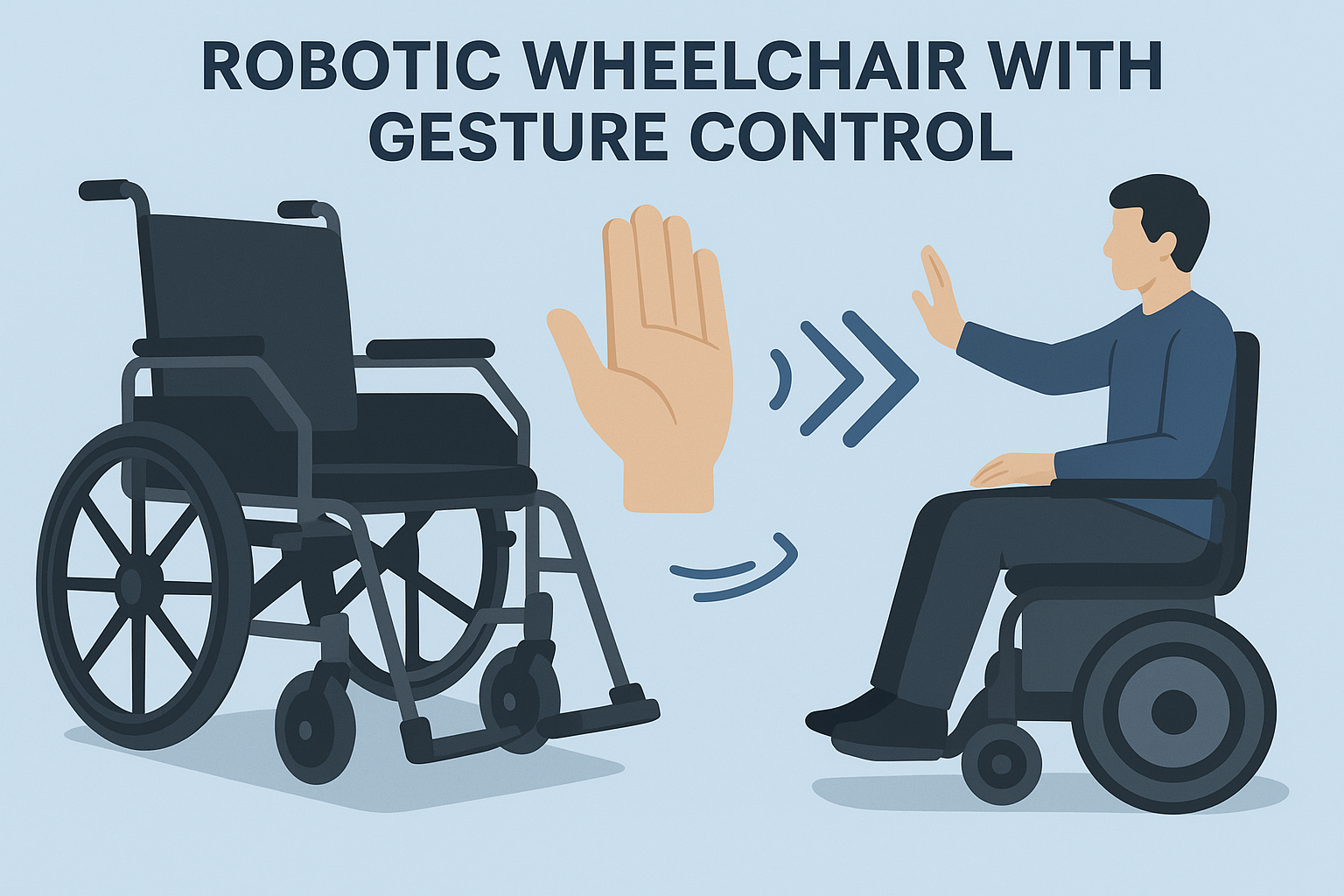

This project is a smart wheelchair designed to increase independence and accessibility for people with mobility impairments. It responds to hand gestures and voice commands, so users can navigate without traditional controls.

The system uses deep learning to classify gestures in real time, so user inputs are interpreted quickly and reliably. It also combines computer vision with IMU-based motion tracking to deliver precise movement control, even in dynamic environments.
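
As a rough illustration of how per-frame gesture predictions might be turned into stable drive commands, here is a minimal sketch. It is not the project's actual implementation: the gesture labels, velocity values, thresholds, and the `GestureCommandFilter` class are all hypothetical, and the classifier itself is assumed to exist elsewhere and to emit a `(label, confidence)` pair per frame.

```python
from collections import Counter, deque
from typing import Deque, Dict, Tuple

# Hypothetical gesture labels mapped to (linear m/s, angular rad/s) commands.
# The real label set and speeds are not specified in this document.
GESTURE_TO_VELOCITY: Dict[str, Tuple[float, float]] = {
    "forward": (0.5, 0.0),
    "backward": (-0.3, 0.0),
    "left": (0.0, 0.6),
    "right": (0.0, -0.6),
    "stop": (0.0, 0.0),
}


class GestureCommandFilter:
    """Debounce per-frame predictions with a sliding-window majority vote."""

    def __init__(self, window: int = 5, min_confidence: float = 0.8,
                 min_votes: int = 3) -> None:
        self.history: Deque[str] = deque(maxlen=window)
        self.min_confidence = min_confidence
        self.min_votes = min_votes

    def update(self, label: str, confidence: float) -> Tuple[float, float]:
        # Low-confidence or unknown predictions are counted as "stop" votes,
        # so uncertainty pushes the chair toward stopping.
        if confidence < self.min_confidence or label not in GESTURE_TO_VELOCITY:
            label = "stop"
        self.history.append(label)

        # Only act on a gesture once it has enough votes in the window.
        winner, votes = Counter(self.history).most_common(1)[0]
        if votes < self.min_votes:
            return GESTURE_TO_VELOCITY["stop"]
        return GESTURE_TO_VELOCITY[winner]
```

With these assumed defaults, a gesture has to be seen confidently in three of the last five frames before the chair moves, and a run of uncertain frames brings it back to a stop. The window size trades responsiveness against robustness to single-frame misclassifications.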

By combining AI, robotics, and assistive technology, this project aims to offer a smarter, more inclusive mobility experience. It makes movement easier for users and is a step toward intelligent, human-centric assistive devices.